Portfolio: AI Projects

Zip Co: The Oracle Chat-Bot

Back when I was working as a Project Manager at Zip in early-mid 2021 (pre-ChatGPT), I had just secured access to GPT-3 through a friend. I was brainstorming uses and came up with an idea to create a chat bot that could be added to the company Slack system at Zip.

I researched how to fire off webhooks to external systems from within a Slack channel or DM, and set up a webhook pointing at an "HTTP endpoint" / "Debug node" pair that I created within a NodeRED instance (fyi: NodeRED is a JavaScript-based low-code programming environment that can be self-hosted).

This simple setup allowed me to send Slack messages to a channel or to DM them to a user I created specifically for my experiment, and then observe the message content of each HTTP webhook request that was being fired from Slack to my NodeRED instance.

Once I understood the structure of the request and how to respond to it from within NodeRED, I was able to quickly throw together some conditional statements in NodeRED that would reply with a unique message that was dependent upon the message that was originally received from Slack.

I named the Bot user in Slack "The Oracle" and then began to play around with the GPT-3 Playground - engineering different prompts that I thought might be useful within a corporate environment - as well as learning the structure/schema of the GPT-3 API.

Once I had arrived at a few good prompts, I added them to the NodeRED instance and used conditional keyword / phrase recognition on the incoming message to determine which prompt to route the message through.
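
The keyword routing described above can be sketched roughly as follows. This is a minimal Python illustration rather than the original NodeRED flow: the prompt templates, the keywords and the `route_message` function are all hypothetical stand-ins, since the real prompts are not reproduced here.

```python
# Hypothetical prompt templates keyed by the keyword that selects them.
# The real prompts used by "The Oracle" are not shown in this write-up.
PROMPTS = {
    "haiku": "Write a haiku about the following topic.\nTopic: {message}\nHaiku:",
    "user story": "Write an agile user story for the following feature.\nFeature: {message}\nUser story:",
}

# Fallback used when no keyword matches (also an assumption).
DEFAULT_PROMPT = "Solve the following problem step by step.\nProblem: {message}\nSolution:"


def route_message(message: str) -> str:
    """Pick a prompt template by keyword and fill in the incoming message."""
    lowered = message.lower()
    for keyword, template in PROMPTS.items():
        if keyword in lowered:
            return template.format(message=message)
    return DEFAULT_PROMPT.format(message=message)


if __name__ == "__main__":
    # The filled-in prompt is what would be sent on to the language model.
    print(route_message("Write me a haiku about deadlines"))
```

The first matching keyword wins, so the order of the entries matters if a message could match more than one of them.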

Some of the things that "The Oracle" could do included - (i) Solving problems; (ii) Writing Haikus; (iii) Writing User Stories; (iv) Returning a mock Product Interview for a defined Product idea; and much much more.

Finally - I added one last integration into Zip's Jira workspace in order to give the chat bot the ability to answer questions about agile projects that were in progress, and also played around with fine-tuning the GPT-3 model with Questions and Answers about the history of the company.

At the time that I did all of this - only a few people in the "in crowd" knew about Generative AI and Large Language Models (as ChatGPT was not yet released) - so when I made it available to my colleagues they thought it was magic!

Personal: Circl.AI

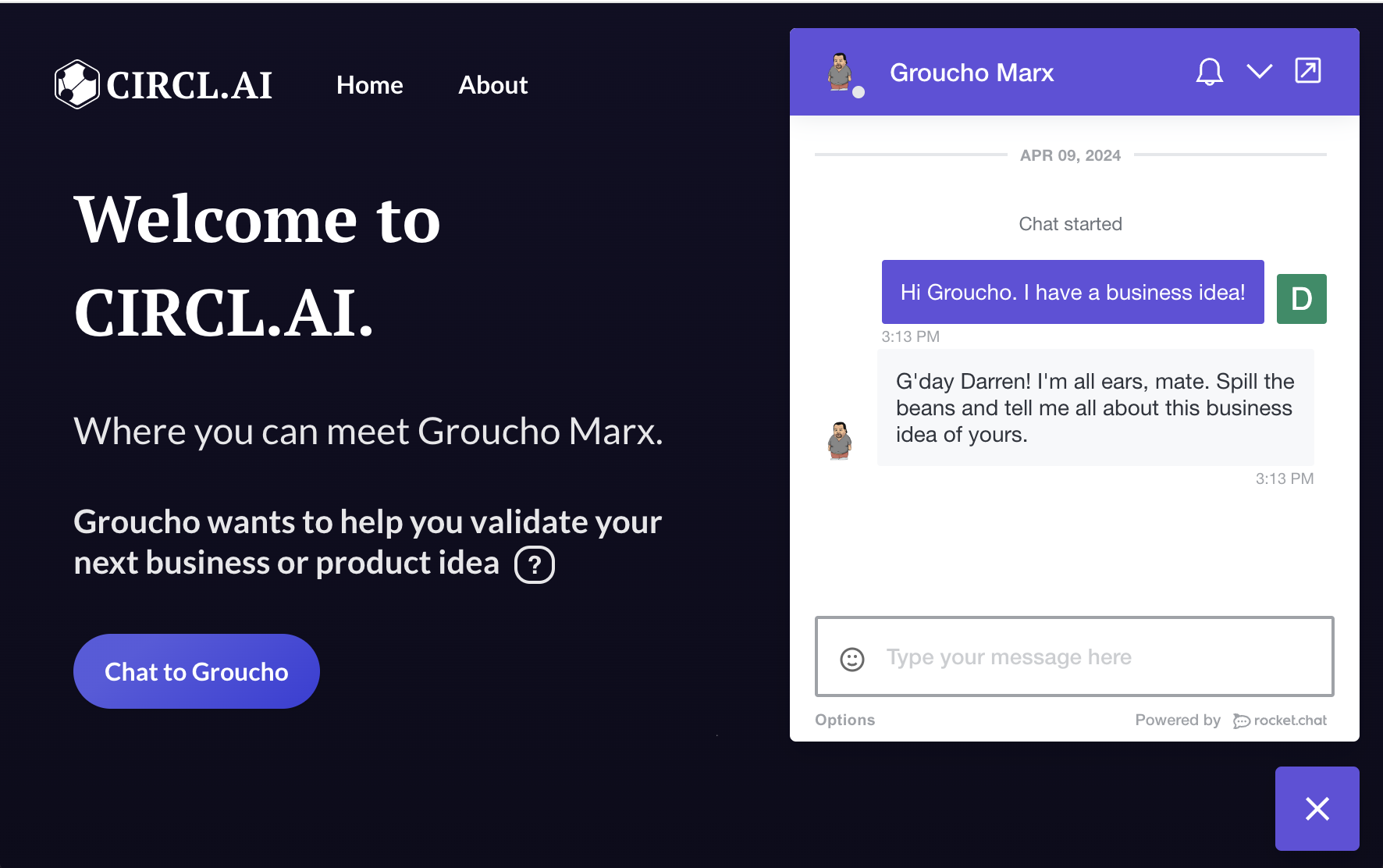

One personal project that I have been working on is Circl.Ai. Feel free to click the link to check it out for free!

The target market for Circl.Ai is Product Managers within corporates and Entrepreneurs. The premise is that you explain a business or product idea to an AI Persona named Groucho, and he works with you to form and validate it so that you can find product-market fit without having to invest significant time or money.

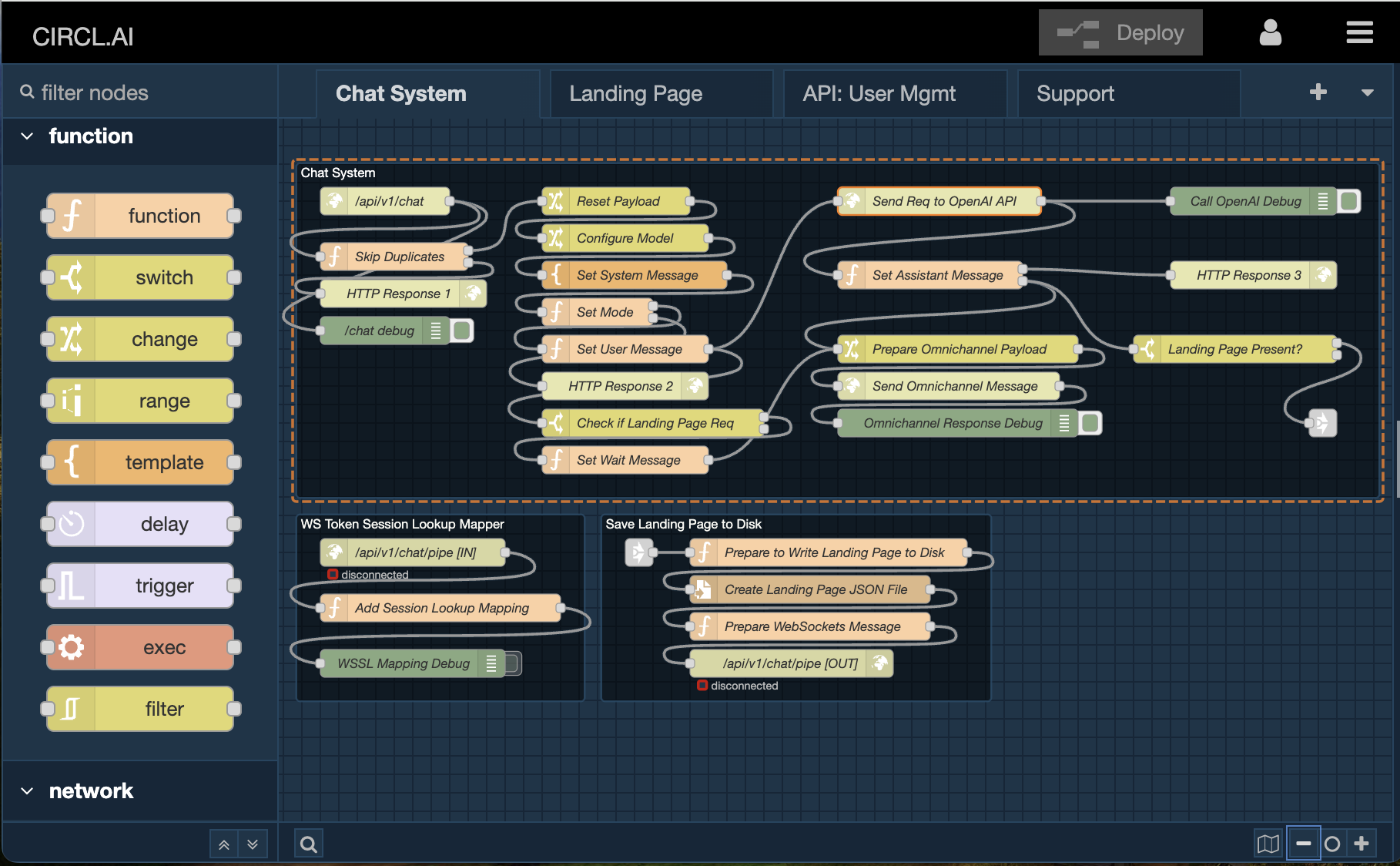

Circl.Ai is currently composed of three interconnected sub-systems - (i) A self-hosted NodeRED instance that contains all static HTML as well as an API / middleware containing the business logic for the product; (ii) A self-hosted instance of Rocket Chat - an open source alternative to Slack; and (iii) the GPT-4 API which is hosted by OpenAI.

The NodeRED instance contains a collection of proprietary LLM prompts that have been engineered to - Guide an end-user through the process of idea validation; and to Generate a landing page for the end-user that is designed to optimise demand.

Rocket Chat is not used directly per se, but rather its Omnichannel LiveChat JS Widget is embedded in the Circl.Ai homepage - with this being the interface that the end-user utilises to validate their idea.

Development is ongoing - so if you would like to know what is up and coming then simply click the "About" link in the header of the Circl.Ai homepage.

Personal: IndustrySwarm Genesis

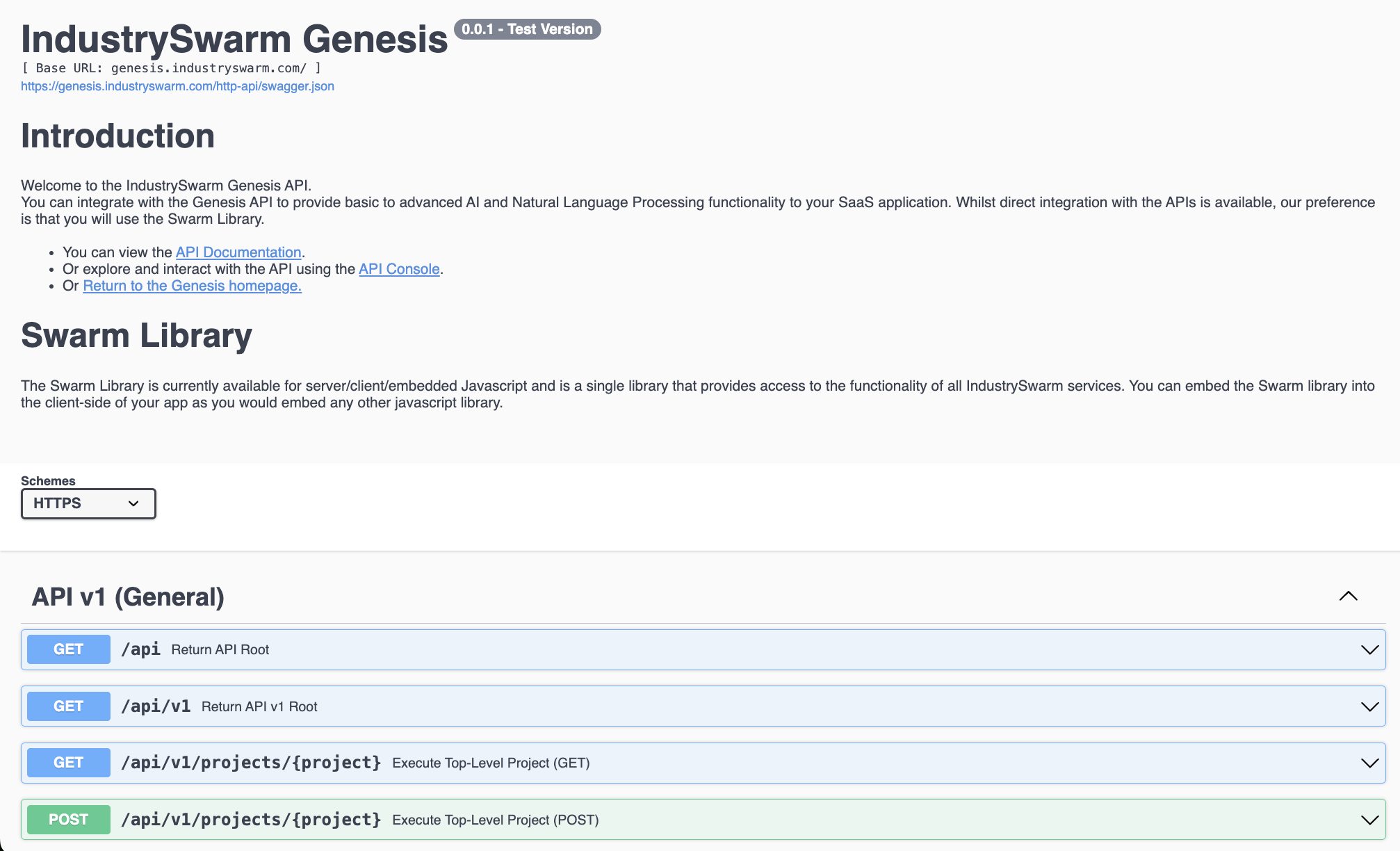

Another personal project that I worked on is the IndustrySwarm Genesis Platform, although development on this project halted years ago.

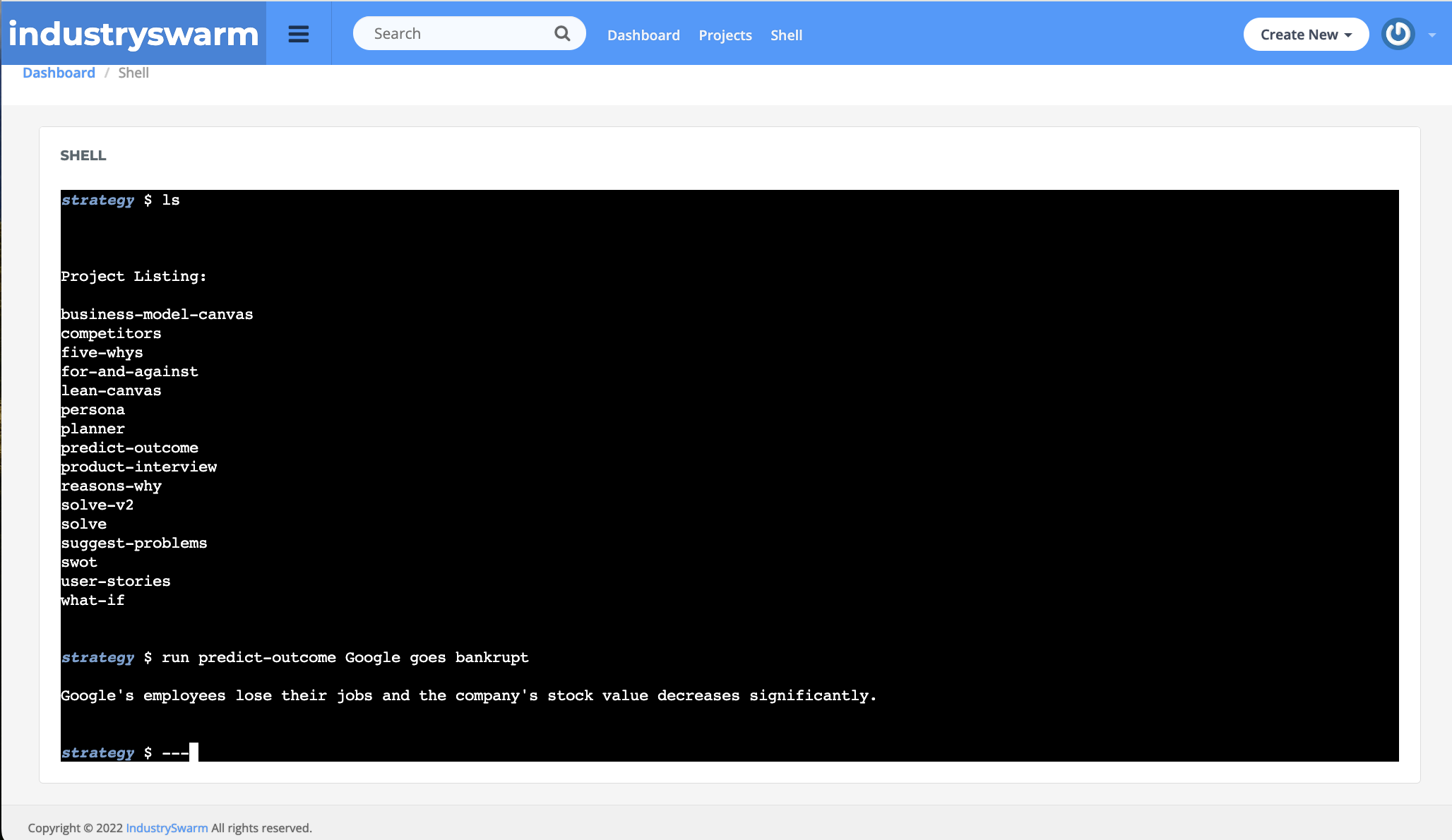

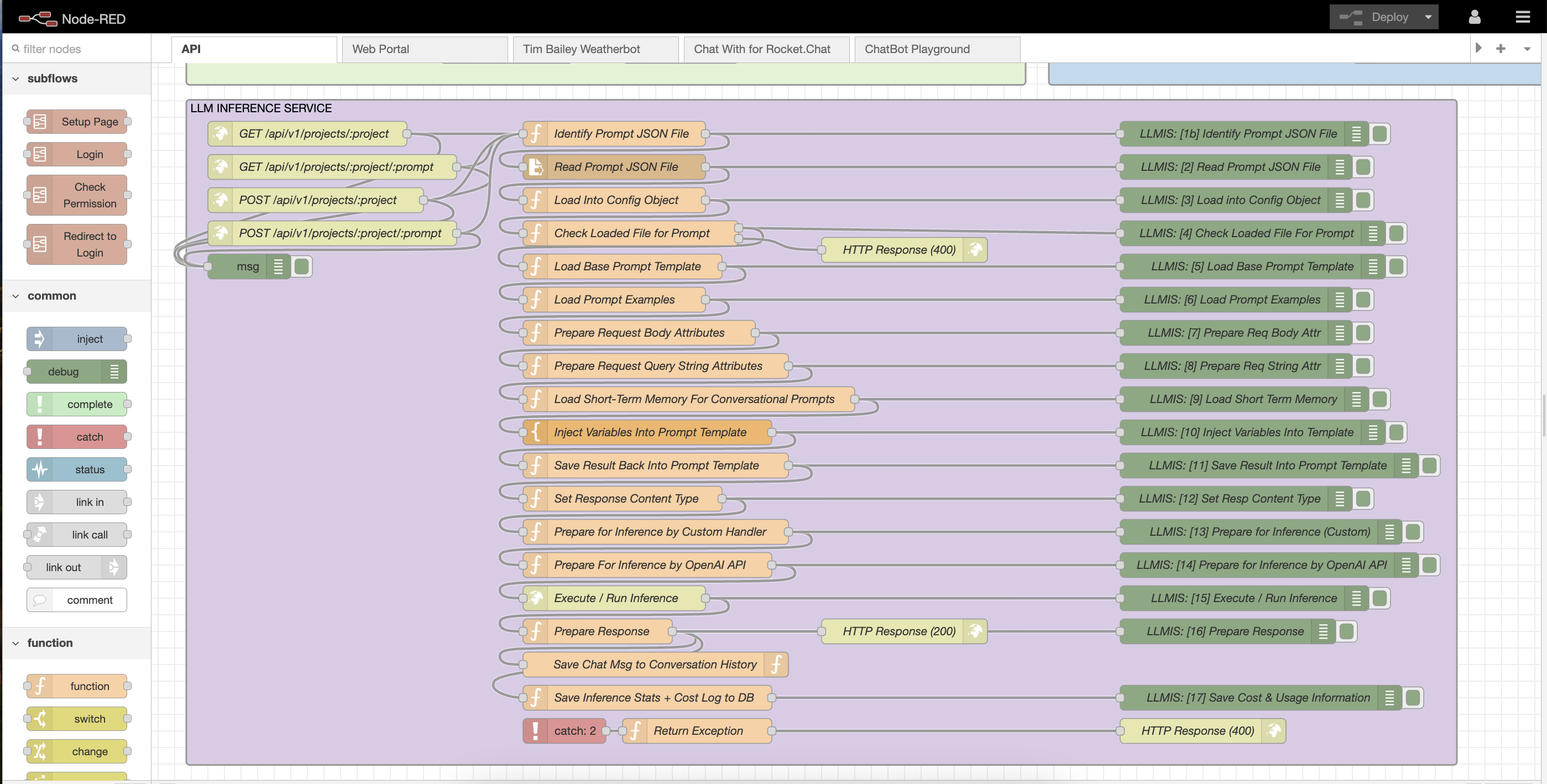

Simply put, this is a Large Language Model (LLM) Inference Server. It contains a moderately sized collection of prompt files for various use cases, and uses the OpenAI GPT-3.5-Turbo-Instruct model to process them. These use cases can be accessed via the Genesis API (click for more info) or Genesis Shell (also click for more info).

The shell is the easiest way to get started. Simply click the link in the previous paragraph to access it and, when asked, enter "castle" as the secret word and hit enter. Once you are in the Shell you can use simple unix commands to navigate, such as "ls" to get a list of projects or collections in the current folder, and "cd [collectionName]" to open a folder, or collection of prompts.

Once you have found a prompt that interests you, you can run it by typing "run [promptName] [input]", where [input] is what you want to pass into the prompt. For example to get a SWOT matrix for Google, type - "ls"; then "cd strategy"; then "ls"; then "run swot Google". The output will be rendered within the shell.

There are other commands that you can execute apart from "run". These are "describe [promptName]" to get a plain text description of what the prompt in question does, "export [promptName]" to open a new tab with the underlying prompt JSON file, or "api [promptName]" to open up an API to access that prompt in a new tab. The prompt JSON file format is proprietary and was built specifically for Genesis.
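
To make the command grammar concrete, here is a minimal Python sketch of how a line typed into the shell could be split into a command, a target and free-text input. It is only an illustration under my own assumptions: the `Command` tuple and `parse_command` function are hypothetical names, and the real Genesis Shell may parse its input differently.

```python
from typing import NamedTuple


class Command(NamedTuple):
    name: str        # e.g. "run", "cd", "ls"
    target: str      # prompt or collection name, empty for "ls"
    input_text: str  # everything after the target, passed to the prompt


# The commands mentioned in this section.
KNOWN_COMMANDS = {"ls", "cd", "run", "describe", "export", "api"}


def parse_command(line: str) -> Command:
    """Split a shell line into command, target and the remaining input."""
    # maxsplit=2 keeps multi-word input such as "Ronald McDonald" intact.
    parts = line.strip().split(maxsplit=2)
    if not parts or parts[0] not in KNOWN_COMMANDS:
        raise ValueError(f"unknown command: {line!r}")
    target = parts[1] if len(parts) > 1 else ""
    input_text = parts[2] if len(parts) > 2 else ""
    return Command(parts[0], target, input_text)


if __name__ == "__main__":
    print(parse_command("run swot Google"))
```

Limiting the split to two breaks means the prompt input can contain spaces without needing quotes.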

Outside of simple "run once" prompts there are also interactive prompts within the "chat", "fun" and "simulations" folders. For example, if you type "cd chat" and then "run chat-with Ronald McDonald", you open up a back-and-forth conversation with the star of McDonald's.

Personal: AGI Prototype Service

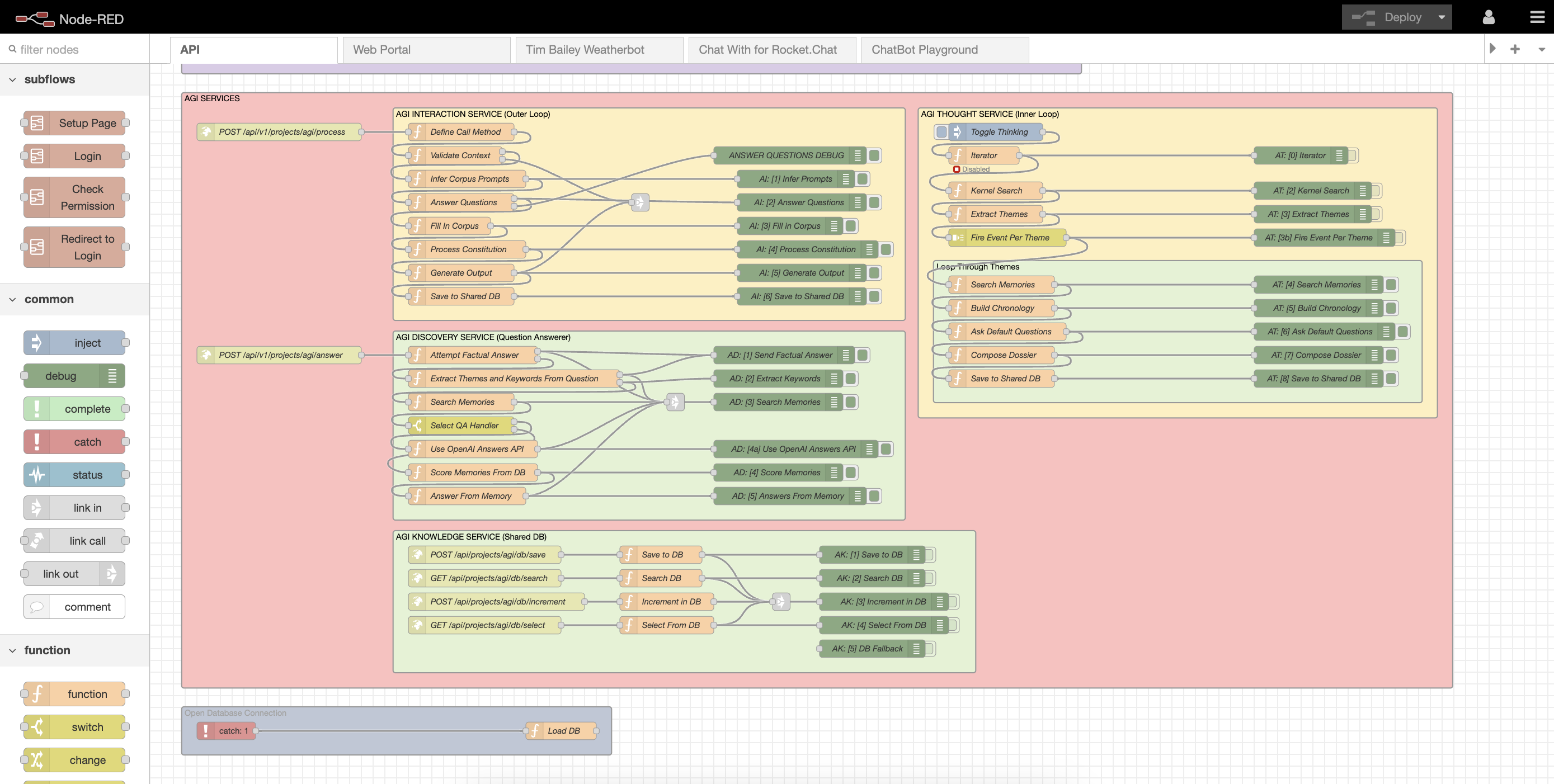

Another small AI project that I worked on and incorporated into the Genesis platform was the AGI Prototype Service. It is a JavaScript (NodeRED) based service, much like my others, that was reverse engineered from the Python-based NLCA (Natural Language Cognitive Architecture) Discord Bot by David Shapiro.

It comprises four microservices - the Interaction Service, Discovery Service, Knowledge Service and Thought Service. The Interaction Service provides a chat-based interface that can be accessed via the Genesis Shell. When a chat message is sent to this service, a prompt is executed that extracts any questions from the original chat message and sends these to the Discovery Service to be answered. The Discovery Service first attempts to answer any factual question directly. If the question is not factual but instead refers to a previous conversation, the Discovery Service calls the Knowledge Service - which is effectively a shared database of all conversations that have been had.

In the background, the Thought Service (or Inner Loop) is executed according to a schedule - and is responsible for introspection or reflection over previous conversations in order to compile dossiers that are stored in the Shared DB (or Knowledge Service) which are used for future interaction / conversation.
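
The hand-off between the Interaction, Discovery and Knowledge services can be sketched in Python as below. This is a simplified illustration, not the NodeRED implementation: in the real service the question extraction is done by a prompt, whereas here it is a plain regular expression, and the cue phrases, the word-overlap search and all function and class names are assumptions made for the example. The scheduled Thought Service is not covered.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List


def extract_questions(message: str) -> List[str]:
    """Stand-in for the question-extraction prompt: keep sentences ending in '?'."""
    sentences = re.split(r"(?<=[.?!])\s+", message.strip())
    return [s for s in sentences if s.endswith("?")]


def _tokens(text: str, min_len: int = 1) -> set:
    """Lower-cased words with surrounding punctuation stripped."""
    return {w.strip("?.,!").lower() for w in text.split() if len(w) >= min_len}


@dataclass
class KnowledgeService:
    """Stand-in for the shared database of past conversations."""
    messages: List[str] = field(default_factory=list)

    def store(self, message: str) -> None:
        self.messages.append(message)

    def search(self, question: str) -> List[str]:
        # Naive word-overlap lookup; short words are ignored as noise.
        wanted = _tokens(question, min_len=4)
        return [m for m in self.messages if wanted & _tokens(m)]


# Hypothetical cues that a question is about earlier conversation.
CONVERSATION_CUES = ("earlier", "previously", "last time", "you said", "did we", "did i")


def discover(question: str, knowledge: KnowledgeService,
             answer_factual: Callable[[str], str]) -> List[str]:
    """Answer factual questions directly, otherwise consult the Knowledge Service."""
    if any(cue in question.lower() for cue in CONVERSATION_CUES):
        return knowledge.search(question)
    return [answer_factual(question)]
```

In use, `answer_factual` would be a call to the language model; passing it in as a function keeps the routing logic testable without one.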

Freelancer: AI Strategy

Back when I was working as a Senior Product Manager at Freelancer.com I was tasked with setting the company's AI Strategy. This involved first interviewing all of the product managers within the business about their respective products and the pain points that their customers had, and then attempting to identify whether there were any opportunities to solve any of these pain points with OpenAI's GPT-3.

I ended up capturing a wide range of problems and pain points and identifying a multitude of potential applications of the GPT-3 LLM. Before looking to implement any solution or create any prototypes, I firstly built an LLM Inference Platform called "The Singularity Platform" that was similar to the IndustrySwarm Genesis product that I had previously created, but intended for use solely within the Freelancer business.

In addition to simplifying prompt development, deployment and management, the platform also provided rapid prototyping functionality. This was done by creating new pages (with their own URLs) on the platform that proxied existing web content from the Freelancer.com website and our other products. Each page used server-side jQuery, in the form of the Cheerio library, to extract data from the proxied content, routed it to a prototype module which itself utilised the inference server, and then injected new or amended content back into the page before serving it to the end user.
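
The extract, process and inject cycle can be illustrated with a short Python sketch. The platform itself used Cheerio inside NodeRED; this version swaps in Python's standard `html.parser` purely for illustration, and the element id, the `prototype-output` section and the `rewrite_page` function are hypothetical names. The `prototype` argument stands in for a prototype module that would call the inference server.

```python
from html import escape
from html.parser import HTMLParser
from typing import Callable

# Tags that never have a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"br", "img", "hr", "input", "meta", "link"}


class TextById(HTMLParser):
    """Collects the text inside the first element with the given id."""

    def __init__(self, element_id: str):
        super().__init__()
        self.element_id = element_id
        self.depth = 0      # > 0 while inside the target element
        self.done = False   # True once the target element has closed
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if self.done or tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1
        elif dict(attrs).get("id") == self.element_id:
            self.depth = 1

    def handle_endtag(self, tag):
        if self.depth and tag not in VOID_TAGS:
            self.depth -= 1
            self.done = self.depth == 0

    def handle_data(self, data):
        if self.depth:
            self.chunks.append(data)


def rewrite_page(html: str, element_id: str, prototype: Callable[[str], str]) -> str:
    """Extract text from the page, run the prototype on it, inject the result."""
    parser = TextById(element_id)
    parser.feed(html)
    extracted = "".join(parser.chunks).strip()
    injected = f'<section id="prototype-output">{escape(prototype(extracted))}</section>'
    if "</body>" in html:
        return html.replace("</body>", injected + "</body>", 1)
    return html + injected
```

The proxied page is otherwise served unchanged, which is what makes this approach useful for prototyping on top of live content.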

One example of an application that I discovered, and that utilised the prototype and inference functions of the Singularity platform, was the "Project SEO Tool". When an end-user requested a project page from Freelancer.com, this tool would extract the project description and then use a prompt on the inference service to extract relevant keywords and phrases from that description. It then sent these to the Google Trends API to convert them into new phrases that ranked highly in Google, before compiling these back into relevant content that was injected into the project page as "juice" for Google.

This is just one example of the large collection of opportunities to use AI to optimise Freelancer group products that we identified and worked on.

Personal: Tim Bailey Weather Chat-Bot

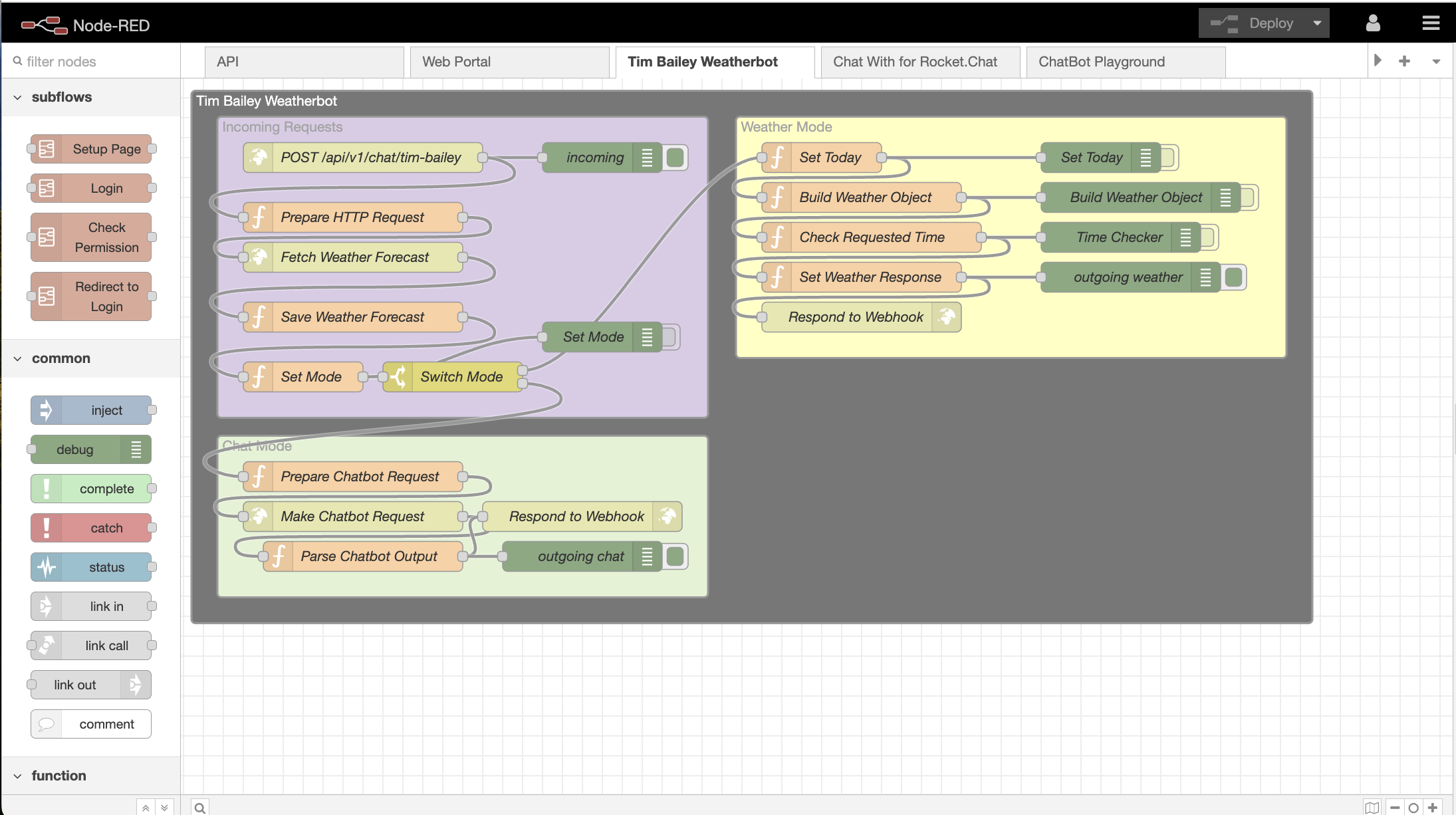

One more personal project that I built was the Tim Bailey Weather Chat-Bot, which I created to demonstrate my capabilities for an interview with a firm that specialised in Conversational AI. The bot is a standard back-and-forth chat bot with the personality of Tim Bailey from TV Network Ten's Weather segment of the news.

This chat bot is integrated with a free weather forecasting service API that allows you to ask many different questions related to the weather and to get accurate, up-to-date and relevant responses from "Tim".

Unfortunately I haven't tested this chat-bot recently so it may or may not work. If you would like to give it a go then you can do so directly via the API (check the Console link in the docs) or you can create a new user in Slack or Rocket Chat named "Tim Bailey" with an outgoing webhook pointed at the Tim Bailey Chat-Bot API (yes, click that to see the documentation for the API).

Personal: Chat w/ Anyone + Workshops

One of the earlier AI-powered projects that I worked on was "Chat with Anyone + Workshops", as part of the IndustrySwarm Genesis platform.

I created a conversational prompt within Genesis called "Chat With Anyone" that was exposed via an API endpoint. That endpoint allowed you to provide a short one or two word description / name for the AI persona that you wanted to chat with, as well as a longer "context" parameter. This endpoint was built so that you could directly interface with it from an Outgoing Rocket Chat Integration.

I added support for conversations happening in a DM (direct message) between the user and a single AI persona. I also added support for conversations happening within a Rocket Chat channel that you could invite one or more AI personas to participate in. This was the "workshops" component. As the "real" (non-AI) participant in the channel, you could first invite one or more AI personas to a Rocket Chat channel, and then define a topic for the conversation within that channel. You would then wait and watch whilst the AI personas in the channel started discussing the topic amongst one another.
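
A workshop of this kind can be sketched as a simple turn-taking loop. The Python below is an illustration only: the round-robin turn order, the `run_workshop` function and the "Host" label are my assumptions for the example, and `generate_reply` stands in for a call to the "Chat With Anyone" endpoint.

```python
from typing import Callable, List, Tuple

# One entry per message: (speaker, text).
Transcript = List[Tuple[str, str]]


def run_workshop(
    topic: str,
    personas: List[str],
    generate_reply: Callable[[str, Transcript], str],
    rounds: int = 2,
) -> Transcript:
    """Let each persona speak in turn, seeing everything said so far."""
    transcript: Transcript = [("Host", topic)]
    for _ in range(rounds):
        for persona in personas:
            reply = generate_reply(persona, transcript)
            transcript.append((persona, reply))
    return transcript


if __name__ == "__main__":
    # A stub in place of the language model, just to show the flow.
    def echo(persona: str, transcript: Transcript) -> str:
        return f"{persona} responds to {transcript[-1][0]}"

    for speaker, text in run_workshop("Remote work", ["Ada", "Grace"], echo):
        print(f"{speaker}: {text}")
```

Each persona receives the full transcript so far, so later speakers can react to earlier ones as well as to the topic.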